A few weeks ago, the National Institute for Health and Care Excellence (NICE) released a position statement on the use of Artificial Intelligence (AI) in evidence generation. Their announcement came at a time when our own team was discussing a best-practices approach to AI within the context of our work. We were delighted to see strong similarities between our conversations and NICE's position. This was especially reflected in the core belief that the use of these sophisticated tools should be grounded in augmenting, not replacing, human involvement.

Our statisticians are no strangers to the use of automation to create efficiencies. However, there are deeper implications surrounding the adoption of advanced AI software that inform our decisions on whether, how and when to use AI tools such as machine learning (ML) and large language models (LLMs) like ChatGPT.

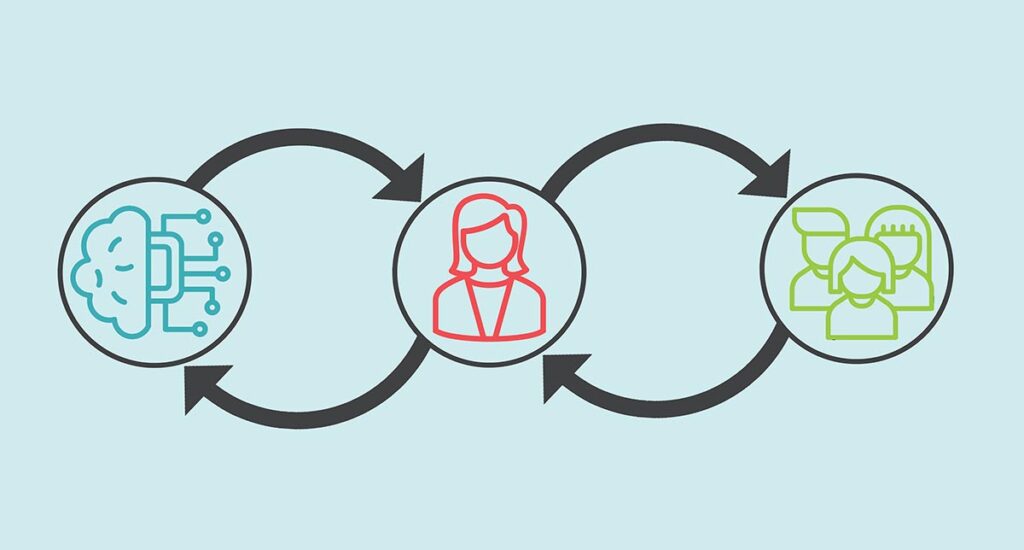

Automated models can be handy for certain tasks such as creating formatted tables or summarizing technical data, but they can’t tell the narrative of a manuscript or interpret the significance of that data. The essence of our work requires a human-in-the-loop approach.

Just because the technology is intelligent doesn’t mean it’s trustworthy or infallible. We’ve all heard about ‘hallucinations’, where an LLM generates an answer that seems to make sense but is in fact completely false. That’s because these models are limited by the way they “train” on various data sources, which may contain inherent errors or be misinterpreted.

Beyond scientific accuracy, we have concerns around transparency and privacy, equity relating to underrepresented groups and resulting bias, as well as regulatory and even environmental issues. AI tools require a substantial amount of energy to run and cool the massive computers behind them. This point is particularly relevant as we place environmental sustainability at the forefront of business decisions whenever possible.

With all of that said, we are excited about AI technology and its future potential. Our analytic teams are monitoring advancements in the field, including the development of domain-specific models that will be trained on HEOR guidance and regulatory frameworks. They are also keeping up with newer statistical methods for dashboards, interactive models and process automation that don’t involve AI.

We will always use the right tools for each assignment and, where it makes sense, that will include AI. The humans on the team are the ones who lead each stage of every project with the ability to think critically, strategize and draw evidence-based conclusions.

At Broadstreet, we value human intelligence above the artificial kind.

If you are interested in reading more, we have links to some useful resources below, including Broadstreet’s own position statement on AI, which we invite you to read.

Resources

Use of AI in evidence generation: NICE Position Statement (August 2024)

Generative AI for Health Technology Assessment: Opportunities, Challenges, and Policy Considerations (R. Fleurence et al., arXiv:2407.11054, August 2024)

Machine Learning Methods in Health Economics and Outcomes Research – the PALISADE Checklist: A Good Practices Report of an ISPOR Task Force (W. V. Padula et al., Value in Health, Volume 25, Issue 7, 1063-1080)

Broadstreet HEOR Position Statement on AI (September 2024)